Marina del Rey

Sitting by the lagoon in Marina del Rey, I sipped a Pilsner Urquell and watched the planes at LAX, two taking off followed by two landing in an infinite repetition. In addition to the world’s largest man-made small boat harbor, Marina del Rey is the home of the Information Sciences Institute (ISI), a research group that has played a key role in the development of the Internet.

Monday morning, I got in my car to find ISI. Since the Institute is part of the University of Southern California, I expected to arrive at some ramshackle house on the edge of campus, but instead found myself on the 11th floor of a modern office building, right on the edge of the harbor.

With 200 staff members and most of its funding from the Defense Advanced Research Projects Agency (DARPA) and other government agencies, ISI plays an important catalyst role in several areas. The MOSIS service, for example, is a way for researchers to get small quantities of custom VLSI circuits. MOSIS gangs together designs from many researchers onto one mask, breaking fabrication costs down to manageable levels.

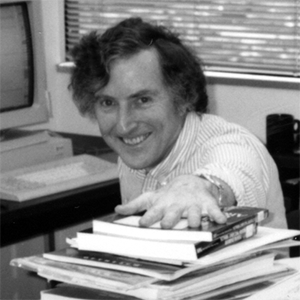

My goal, though, was not to learn about MOSIS but to try and learn more about the many networking projects ISI undertakes. First stop was to find Dr. Jon Postel, Director of ISI’s Communications Division. Jon was reading his mail when I came in, a never-ending task when you get over 100 messages per day. He looked up from his two-finger typing and gave me a warm welcome, evidence of his long residence in California.

Postel is one of the best-known figures in networking. The editor of the RFCs titled “Internet Protocol” and “Transmission Control Protocol,” he is certainly the person most often cited in the footnotes at the back of research papers. In addition to writing key protocols, Postel is the editor of the Request for Comments (RFC) series, and has thus been responsible for the documentation of the TCP/IP protocol suite.

Jon had set up a demanding schedule which we promptly shot to hell. All the projects were so interesting, I kept dallying to find out more. Even then, I saw only a fraction of what ISI had to offer, not even getting to talk to people like Clifford Neuman, codeveloper of key network services such as Prospero and Kerberos.

We started talking about the networks to which ISI belongs. Several years ago, Postel sent a mail message around proposing that a network, now called Los Nettos, be started. The very same day, Susan Estrada of the San Diego Supercomputer Center proposed the establishment of CERFnet.

Both networks went forward, and they illustrate two different yet complementary approaches to providing network service. Jon describes Los Nettos as a “regional network for Blue Chip clients.” Los Nettos connects nine big players, including UCLA, Caltech, TRW, and the RAND Corporation. The network assumes that the clients are self-sufficient and thus has little overhead. As Jon puts it, “we have no letterhead and no comic books.”

By contrast, CERFnet is a full-service network provider, one of the most aggressive and best-run mid-level networks. CERFnet has aggressively pursued commercial customers and helped to found the Commercial Internet Exchange (CIX). CERFnet provides a wide range of user services, including comic books, buttons for users, and other introductory materials.

Both models are necessary and the two networks interconnect and work well together. T1 lines from UCLA and Caltech down to the San Diego Supercomputer Center give Los Nettos dual paths into the NSFNET backbone. Having the two types of service providers gives customers a choice of the type of service they need.

ISI is also a member of two other networks, DARTnet and the Terrestrial Wideband (TWB) network, both experimental systems funded by DARPA. TWB is an international network, connecting a variety of defense sites and research institutes, including UCL in London.

TWB is a semi-production network used for video conferences and simulations. The simulations, developed by BBN, consist of activities such as war games with tanks, allowing tank squadrons in simulators across the country to interact with each other so commanders can experiment with different strategies for virtual destruction.

DARTnet, by contrast, is an experimental network used to learn more about things like routing protocols. To learn more about DARTnet, I walked down the hall to find Robert Braden, executive director of the IAB. Braden was buried behind mountains of paper, including a formidable pile of RFCs.

DARTnet connects eight key sites such as BBN, ISI, MIT, and Xerox PARC. Braden described the network as “something researchers can break.” Although applications such as video conferencing run on the network, the main purpose is experimentation with lower layer protocols.

Each of the DARTnet sites runs Sun computers as routers. Periodically, network researchers, such as the elusive Van Jacobson, modify the code in those routers to experiment with new routing or transport protocols.

One of the key research efforts is supporting different classes of traffic on one network. Video, for example, requires low delay variance in the data stream, a requirement not necessarily compatible with large file transfers.

DARTnet researchers are examining ways that resource reservation can be accommodated in a general-purpose network. Connection-oriented protocols, such as ST, share the underlying infrastructure with the connectionless IP protocol. IP multicasting and other newer innovations are also implemented in the network, allowing bandwidth-intensive video to be sent to several sites without necessarily duplicating the video stream.

The next stop on my tour was a visit with Gregory Finn and Robert Felderman, two of the developers of ATOMIC, an intriguing gigabit LAN under the direction of Danny Cohen. ATOMIC is based on Mosaic, a processor developed at Caltech as the basis for a massively parallel supercomputer.

One of the requirements for a message-based, massively parallel system is a method for passing messages from one processor to another. Mosaic had built this routing function into the processor. ISI decided to use that same chip as the basis for a LAN instead of a supercomputer.

The prototype Gregory and Robert showed me consisted of three Suns connected together with ribbon cables. Each Sun has a VME board with four Mosaic chips and two external cables. It is interesting to note that each Mosaic chip runs at 14 MIPS, making the LAN interface board significantly more powerful than the main processor.

The four channels on the board have a data throughput rate of 640 Mbps. Even on this rudimentary prototype, 1,500-byte packets were being exchanged at a throughput rate of over 1 Gbps. Small packets were being exchanged at the mind-boggling rate of 3.9 million per second.
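To put those figures in perspective, a little arithmetic helps. The sketch below is only a back-of-the-envelope calculation: the function name is made up for illustration, and it takes the "over 1 Gbps" figure as exactly 1 Gbps, so the result is a lower bound on the large-packet rate rather than a measurement.

```python
def packets_per_second(throughput_bps: float, packet_bytes: int) -> float:
    """Packets per second needed to sustain a given throughput."""
    return throughput_bps / (packet_bytes * 8)


# Assumption: treat "over 1 Gbps" as exactly 1 Gbps (a lower bound).
large_packet_rate = packets_per_second(1e9, 1500)

# The small-packet figure quoted above, for comparison.
small_packet_rate = 3.9e6
ratio = small_packet_rate / large_packet_rate

print(f"1,500-byte packets at 1 Gbps: {large_packet_rate:,.0f} per second")
print(f"Small packets arrive roughly {ratio:.0f} times as often")
```

Roughly 83,000 large packets per second is enough to fill a gigabit pipe, so the 3.9 million small packets per second is a statement about per-packet handling speed in the interface rather than about raw bandwidth.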

The system I saw simply had host interface boards connected directly to each other. The same Mosaic chip is being used as the basis for a LAN switch, an architecture that seems to provide a viable alternative to the increasingly complex ATM switches.

The Mosaic-based supercomputer consists of arrays of 64 chips, configured on boards 8 square. The ATOMIC project was going to use those arrays as the basis for a switch, connecting host interfaces (and other grids) to the edge of the array.

Messages coming into the grid would be routed from one Mosaic chip to another, emerging at the edge of the grid to go into a host interface or another grid. Source routing headers on the messages were designed to take advantage of the hardware-based routing support in the processors. In effect, 896 MIPS of processing power on a grid would sit idle while the routing hardware moved messages about.
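The idea of source routing across a grid can be sketched in a few lines. This is a toy model, not the ATOMIC header format, which is not described here: the one-letter hops, the X-then-Y ordering, and the function names are all assumptions made for illustration. The sender writes the whole route into the header, and each chip merely strips the first hop and passes the message to a neighbor.

```python
from typing import List, Tuple

GRID_SIZE = 8        # chips per side, per the 8-by-8 boards described above
MIPS_PER_CHIP = 14   # per-chip figure quoted earlier

# Hypothetical one-letter hop encoding: direction -> (dx, dy)
MOVES = {"N": (0, -1), "S": (0, 1), "E": (1, 0), "W": (-1, 0)}


def build_route(src: Tuple[int, int], dst: Tuple[int, int]) -> str:
    """Sender computes the full route up front (here: X first, then Y)."""
    (sx, sy), (dx, dy) = src, dst
    horizontal = ("E" if dx > sx else "W") * abs(dx - sx)
    vertical = ("S" if dy > sy else "N") * abs(dy - sy)
    return horizontal + vertical


def forward(src: Tuple[int, int], route: str) -> List[Tuple[int, int]]:
    """Each chip consumes the leading hop and hands the message on."""
    x, y = src
    path = [(x, y)]
    while route:
        hop, route = route[0], route[1:]
        mx, my = MOVES[hop]
        x, y = x + mx, y + my
        if not (0 <= x < GRID_SIZE and 0 <= y < GRID_SIZE):
            raise ValueError("route leaves the grid")
        path.append((x, y))
    return path


# A message enters at the west edge and leaves at the east edge.
route = build_route((0, 3), (7, 5))
path = forward((0, 3), route)

# Processing power on one grid: 64 chips at 14 MIPS each.
grid_mips = GRID_SIZE * GRID_SIZE * MIPS_PER_CHIP
print(route, path[-1], grid_mips)
```

Because no chip along the way makes a routing decision, the forwarding step is simple enough to live in hardware, which is why the processors themselves can sit idle.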

What was so intriguing about ATOMIC was the simplicity and elegance of the architecture. While ATM switches had become ever more complex in order to support more users or higher speeds, this appeared to be an architecture that could scale evenly.

Even more intriguing was cost. The individual Mosaic chips and the 8 by 8 grids were being made in large quantities for the Caltech supercomputer and costs would descend to very low levels if ATOMIC ever went into production. The tinker-toy approach to connecting grids together meant that a LAN switch could easily grow at small incremental costs.

ATOMIC is implemented as a LAN under the Berkeley sockets interface, allowing TCP/IP to run over the network. There is no reason, however, that ATOMIC couldn’t be made to send fixed-length packets of 53 bytes, the size of an ATM cell, making the technology well-suited to ATM and B-ISDN, as well as more flexible packet-based architectures.

With only a few researchers, ISI showed that gigabit switches didn’t necessarily have to involve huge development teams or complex designs. By piggybacking on the Mosaic effort, the ISI researchers quickly prototyped a novel solution to a difficult problem.

Just down the hall from the laboratory housing the ATOMIC project is the ISI teleconferencing room. Multimedia conferencing has always been a highly visible effort at ISI, and I met with Eve Schooler and Stephen Casner, two key figures in this area, to learn more about it.

Traditional teleconferencing uses commercial codecs (coder-decoders, sort of a video equivalent to a modem) over dedicated lines. ISI, along with BBN, Xerox, and others, has been involved in a long-term effort to use the Internet infrastructure to mix packet audio and video along with other data.

The teleconferencing room at ISI is dominated by a large-screen Mitsubishi TV split into four quadrants. In the upper left, I could see Xerox PARC, in the lower right, the room I was in. In the Xerox quadrant, I could see Stephen Deering, a key developer of IP multicasting, holding a meeting with some colleagues. In the upper right hand quadrant, I could see the frozen image of a face from MIT left over from the previous day.

Work on multimedia networking began as early as 1973 when ARPANET researchers experimented with a single audio channel. To get more people involved, Bob Kahn, then director of DARPA’s Information Processing Techniques Office, started a satellite network. In time, this network evolved into the Terrestrial Wideband (TWB) network.

Over the years, the teleconferencing effort settled into a curious mix of production and research. Groups like the IAB and DARPA used the facilities on a regular basis to conduct meetings, with TWB logging over 360 conferences in the past three years.

On the research side, people like Eve Schooler and Steve Casner were experimenting with issues ranging from the efficient use of underlying networks to the high-level tools used by meeting participants.

At the low level, one of the big constraints is raw bandwidth. Video channels can take anywhere from 64 to 384 kbps, depending on the brand of codec and the desired picture quality. Codec researchers are examining strategies to squeeze better pictures out of the pipes, ranging from better compression to clever techniques such as varying the amount of data sent depending on the amount of motion.

Teleconferencing, because of high codec costs, had been based on connecting special, dedicated rooms together. With the cost of codecs coming down and even getting integrated into workstations, the focus is rapidly switching to office-based group applications, allowing people to sit at their desks in front of their multimedia workstations.

While desk-based video was still a ways down the pike, audio was maturing rapidly. ISI has been working with people such as Simon Hackett in Australia to come up with a common protocol for moving audio around the network.

After this whirlwind morning of research projects, Jon Postel took me to lunch and I returned to learn more about the key administrative role ISI plays in the Internet.

First stop was the famous Joyce K. Reynolds, Postel’s right arm. In addition to announcing new RFCs to the world, Joyce manages the Internet Assigned Numbers Authority (IANA), the definitive registry of constants like TCP port numbers, SNMP object identifiers, and Telnet options. IANA included all administered numbers except for Internet addresses and autonomous system numbers, an area in which ISI acts as a technical advisor to the NIC.

Although Joyce’s job in RFC processing and IANA functions would seem to take all her time, she also manages the IETF User Services area. For several years, she had been pushing the IETF to try and make using the network as important a priority as making it run. Finally, User Services got elevated to a real “area,” and Joyce led the effort to publish tutorial documents and introductory guides.

Right next to Joyce’s office is that of Ann Cooper, publisher of the Internet Monthly Report containing summaries of X.500 pilots, IAB meetings, IETF and IRTF meetings, gigabit testbeds, and other key activities.

One of Ann Cooper’s functions is administrator of the U.S. domain. While most U.S. names are in the commercial (.COM) or educational (.EDU) name trees, the rest of the world uses a country-based scheme. The U.S. domain is an effort to bring the U.S. up to speed with the rest of the world.

My own domain name is Carl@Malamud.COM. While this is nice for me, placing myself this high on the name hierarchy starts to turn the Domain Name System, in the words of Marshall T. Rose, “from a tree into a bush.” In fact, Rose, being a good citizen of the network, has registered himself under the mtview.ca.us subdomain.

While most U.S. members of the Internet are still under the older .COM, .GOV, and .EDU trees, the .US domain is beginning to catch on. In fact, enough people want to register that ISI has delegated authority for more active cities to local administrators. Erik Fair, for example, the Apple network administrator, has taken over the task of administering names for San Francisco.

My day at ISI was finished just in time to go sit in traffic. I had proved myself to be a non-native of California by booking my flight out of the wrong airport, so I wheeled my car past three Jaguars heading into the Marina and started the 3-hour drive south to San Diego, watching the snow-capped mountains poking their heads over the Los Angeles basin fog.